Introduction

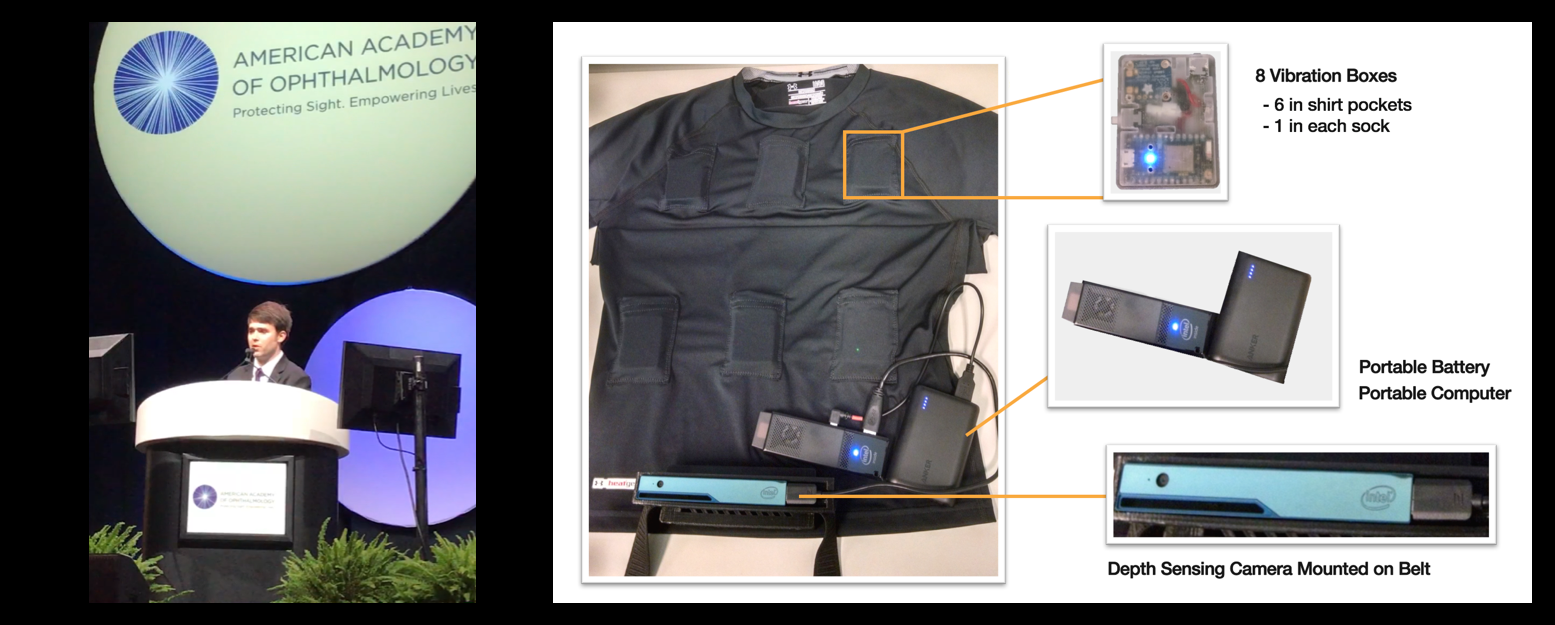

I recently gave a presentation at “Retina Day,” a meeting of retina specialists that occurs in conjunction with the Annual Meeting of the American Academy of Ophthalmology. I know many weren’t able to be there, so I want to briefly share a few highlights from my talk, which was about an exciting project we at the University of Iowa have been working on together with Intel Corporation to provide our patients with a non-invasive wearable enhancement for advanced peripheral visual field loss.

As you know, wearable electronic technology has become an exciting and active area of consumer electronics. Health and medical wearables are commonplace in today’s world and include the Fitbit and other watch-like devices that monitor heart rate, steps, sleep cycles, and other physiologic functions.

Wearable Tech in Ophthalmology

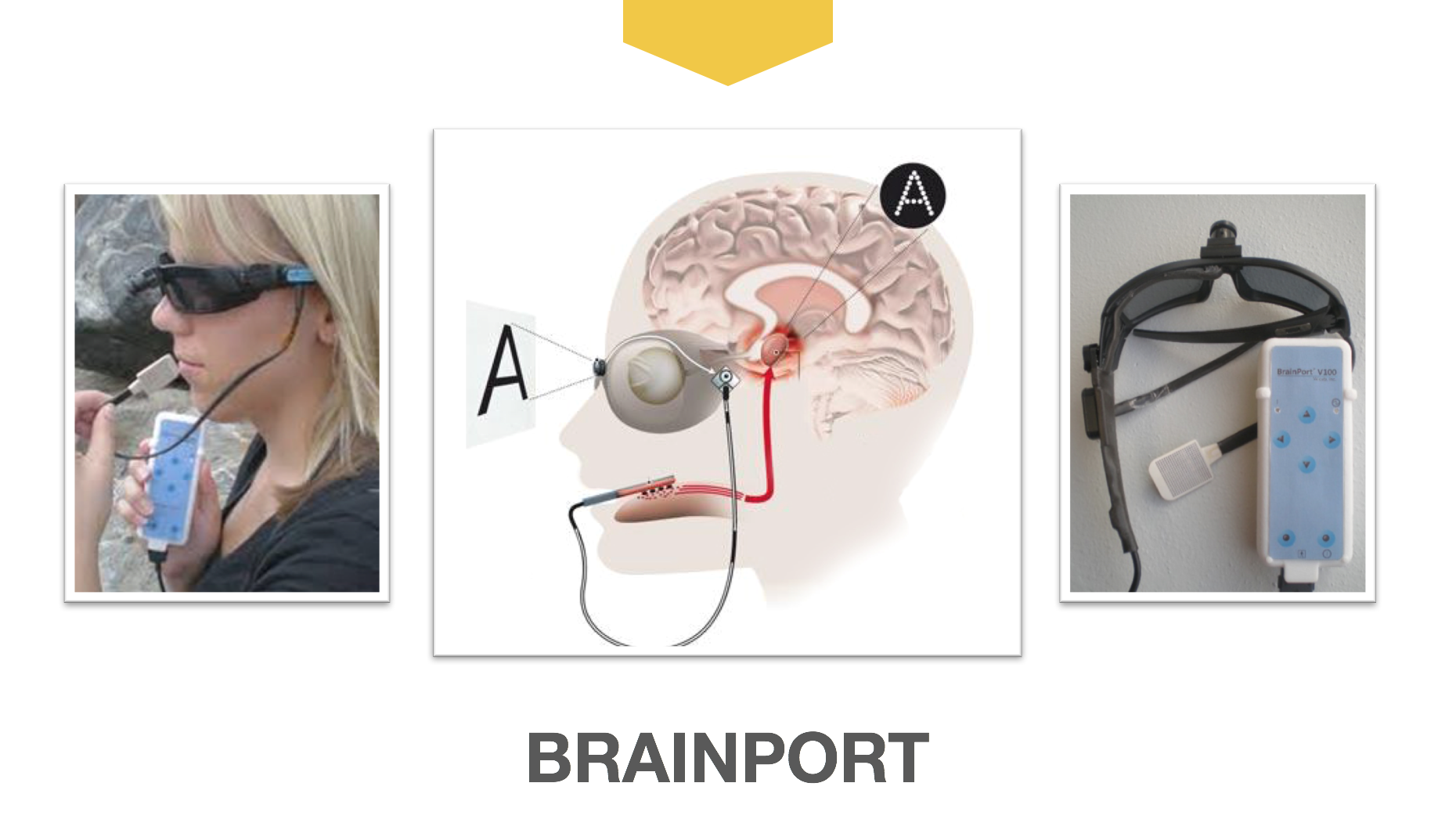

A similar “wearable” device for our visually impaired patients is the FDA-approved Brainport, which uses a spectacle-mounted camera to drive a 400-electrode array placed on the user’s tongue in order to simulate central vision.

Other options for those with severe visual impairment include the Argus II, an FDA-approved epiretinal implant, and the Iris II bionic vision system, an EU-approved subretinal implant, though these devices require surgical intervention and may be difficult to modify as technology improves in the future. Another limitation of these devices is that they are designed as substitutes for central vision.

But what about individuals with retinitis pigmentosa, advanced glaucoma, or other conditions that have resulted in the loss of their peripheral vision? These individuals must be constantly scanning their environment, and they often require sighted guides or canes to safely navigate their surroundings and feel comfortable in social situations as simple as meeting coworkers in a hallway.

Collaboration with Intel Corp

The man seen in the image below is Darryl Adams, a project manager at Intel Corporation who was diagnosed with retinitis pigmentosa about 30 years ago. At the 2015 Consumer Electronics Show, Darryl and Intel CEO Brian Krzanich demonstrated a wearable prototype that uses Intel RealSense 3D camera technology to simulate one’s peripheral visual field. Boxes worn in the user’s undergarment vibrate as objects enter distinct areas of the camera’s view, objects that might otherwise go undetected by someone with poor peripheral vision. In order to promote R&D of this new device, Intel publicly released the software source code and hardware specifications in early 2016, and since April, we have been collaborating with Intel engineers to improve and further develop this device.

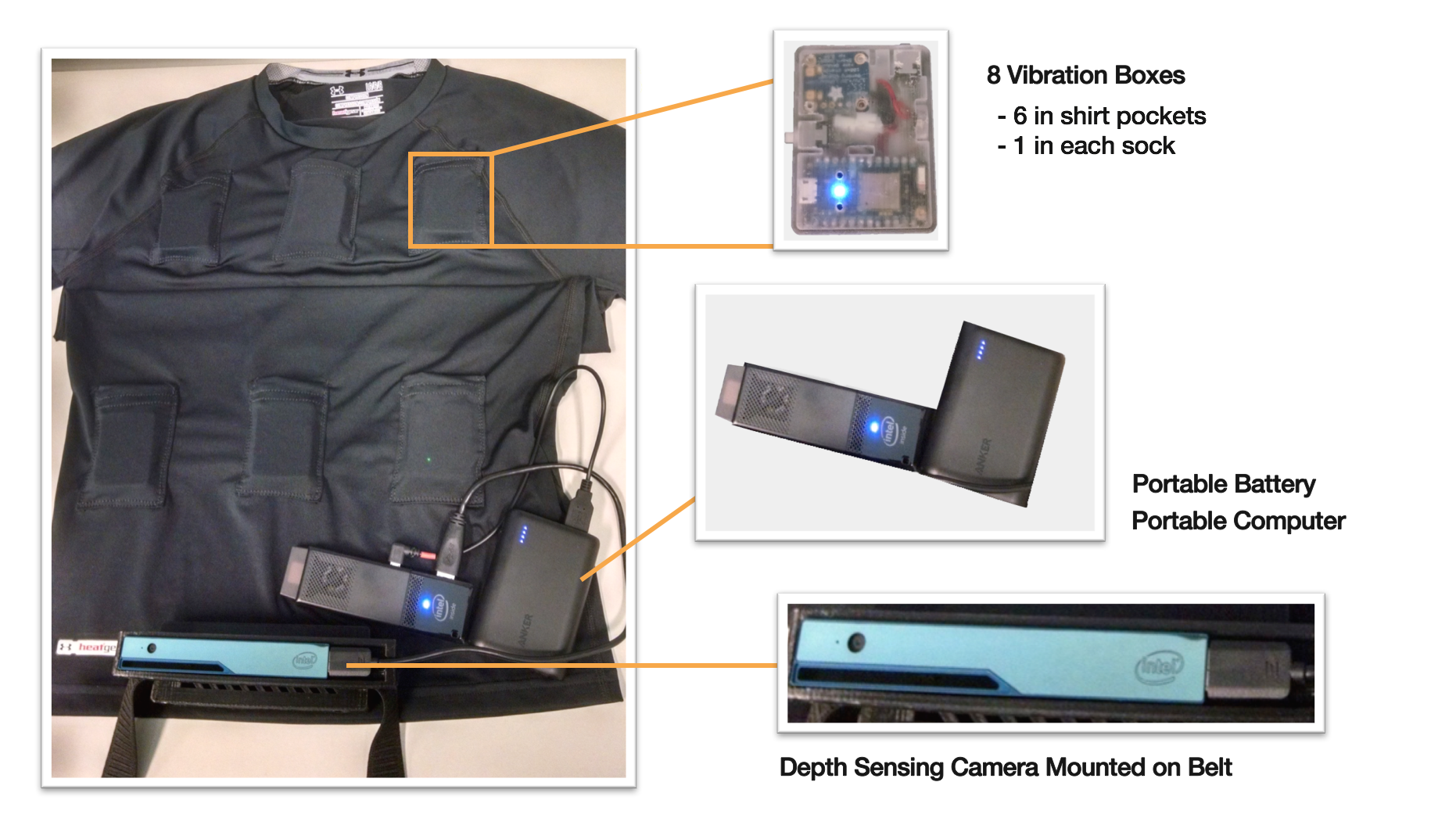

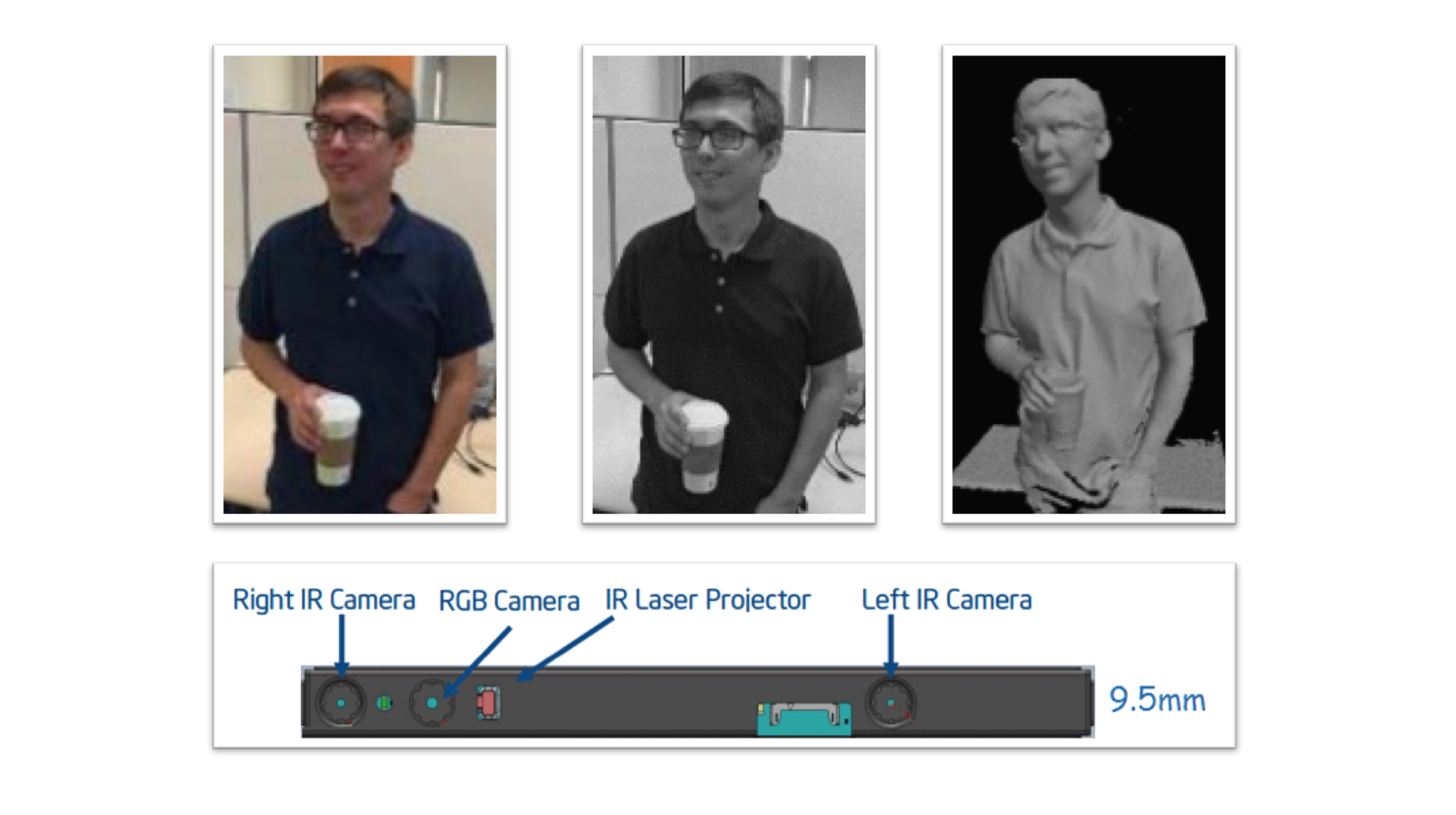

So how does this particular wearable technology work? The device uses a depth-sensing camera, battery, and computer to create a 3-D image of the environment in a 40-degree vertical by 50-degree horizontal visual field and within a range of 0.5 to 4.0 meters. An infrared laser beam is projected onto the environment, and the stereoscopic images recorded by the right and left infrared cameras allow triangulation of the points and creation of a 3-D image of the environment. This 3-D image is then overlaid on a standard red, green, blue camera image, and the result is divided into 8 separate sections. The computer receives this image and then wirelessly transmits the whereabouts of various objects in 3D space to eight small boxes, six of which are worn within the subject’s undershirt and the remaining two at the ankles, one on each. Each of these boxes then vibrates with increasing intensity as objects within that section come closer to the user.
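
For readers who think in code, here is a minimal sketch of the last step described above: turning a depth image into one vibration intensity per section. This is not Intel’s released source code. The function names, the 2-by-4 arrangement of the 8 sections, the use of the nearest reading in each section, and the linear distance-to-intensity mapping are all assumptions made for illustration. Only the 0.5 to 4.0 meter range and the count of 8 sections come from the description above.

```python
# Hypothetical sketch: names, grid layout, and the linear mapping are assumptions.
MIN_RANGE_M = 0.5             # nearest distance in the camera's range (from the text)
MAX_RANGE_M = 4.0             # farthest distance in the camera's range (from the text)
GRID_ROWS, GRID_COLS = 2, 4   # assumed arrangement of the 8 sections


def intensity_for_distance(distance_m):
    """Map a distance in meters to an intensity in [0.0, 1.0]; closer is stronger."""
    if distance_m is None or distance_m > MAX_RANGE_M:
        return 0.0  # nothing detected within range, so no vibration
    clamped = max(distance_m, MIN_RANGE_M)
    return (MAX_RANGE_M - clamped) / (MAX_RANGE_M - MIN_RANGE_M)


def section_intensities(depth_map):
    """Return one intensity per section, row by row, for a 2-D depth map in meters.

    Pixels without a valid depth reading are expected to be None.
    """
    height, width = len(depth_map), len(depth_map[0])
    intensities = []
    for r in range(GRID_ROWS):
        for c in range(GRID_COLS):
            rows = range(r * height // GRID_ROWS, (r + 1) * height // GRID_ROWS)
            cols = range(c * width // GRID_COLS, (c + 1) * width // GRID_COLS)
            readings = [depth_map[y][x] for y in rows for x in cols
                        if depth_map[y][x] is not None]
            # Assumption: the closest object in a section sets that box's intensity.
            nearest = min(readings) if readings else None
            intensities.append(intensity_for_distance(nearest))
    return intensities
```

Calling `section_intensities` on each new depth frame would yield eight numbers between 0 and 1, which could then be sent to the eight boxes. A real device would likely also filter sensor noise and smooth the values over time, which this sketch leaves out.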

Video Examples and Obstacle Course

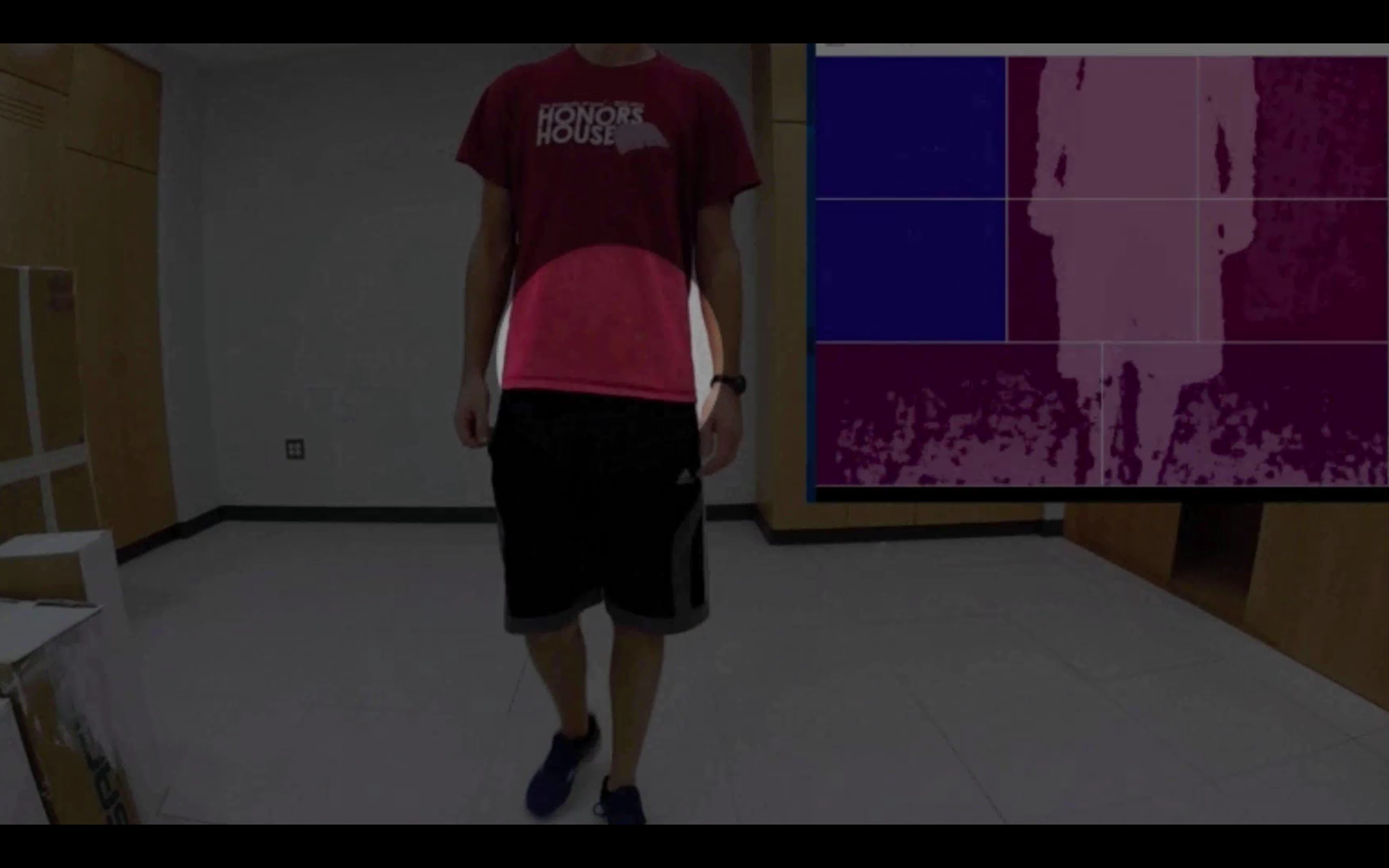

In the linked YouTube video above, you will see one of our engineering students walking in front of this device, simulating how a patient with poor peripheral vision may benefit from its use. The bright central circle represents the user’s approximately 10 degrees of remaining visual field, and the red-blue colored map demonstrates how the various boxes vibrate as objects move within each section of the camera’s view.

Also in the video, you will see what it might be like to blindly navigate a large room with multiple obstacles using only this device. Remember that the changing colors seen on the right represent the variations in distance from each obstacle, which are transmitted to each box as vibrations that grow in intensity as the object in that area of the visual field gets closer.

Project Status

So what is the status of this project? We are currently working with Intel to improve the system architecture, software, and functionality, and we now have IRB approval to assess the performance and usefulness of the device, with several subjects already enrolled in qualitative testing.

Most recently, we have been working to improve the comfort and functionality of the device. We have created a 3D-printed attachment for the GoPro camera chest mount and are also developing several quantitative tests of the device’s distance and visual field capabilities.

Conclusion

In conclusion, we are optimistic that this device has the potential to become a wearable, non-invasive alternative for patients with advanced peripheral visual field loss.

Acknowledgements

Many thanks to Dr. Stephen Russell at the University of Iowa Department of Ophthalmology and to the team of University of Iowa engineering students. And a special thanks to Darryl Adams and his colleagues at Intel Corporation for their dedication to innovation, research, and ophthalmology. For more information on the Intel RealSense 3D camera technology, visit the links below.

CES 2015 Intel Keynote: Intel RealSense Technology as an Aid for the Visually Impaired
